Autonomous Vehicles Don’t Fail Because of Algorithms. They Fail Because of Data.

Autonomous vehicles are often discussed in terms of advanced sensors, powerful GPUs, and cutting-edge algorithms. But in real-world deployments, failures rarely happen because a model is mathematically weak. They happen because the model misunderstands the environment.

And that misunderstanding almost always traces back to one thing: data annotation.

Before an autonomous system can make decisions, it must first perceive the world correctly. That perception depends on how well raw sensor data is labeled, structured, and validated. This is where AI data annotation services play a critical role. In autonomous driving, annotation is not a supporting step; it is the foundation.

From Raw Sensors to Machine Understanding

An autonomous vehicle does not see roads, pedestrians, or traffic signals the way humans do.

It sees:

- Raw pixels from cameras

- Dense point clouds from LiDAR

- Motion and velocity signals from radar

- Continuous streams of video frames

On their own, these inputs carry no meaning. Annotation converts this raw data into structured ground truth, allowing models to learn what exists in the scene, where it is, and how it behaves over time.

Without accurate image labeling and validation, even the most advanced perception models struggle in real-world driving conditions.

What Autonomous Annotation Really Involves

Autonomous annotation is not a single task. It is a combination of multiple annotation techniques that work together to support perception, prediction, and planning modules.

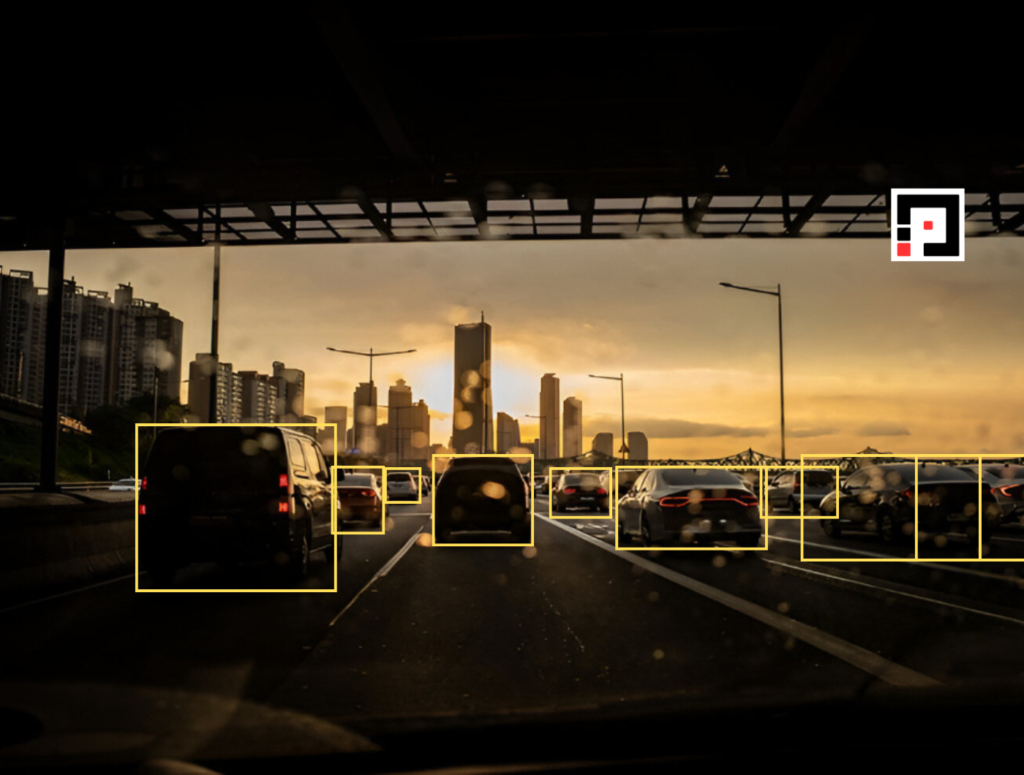

Bounding Boxes: Identifying Objects in the Scene

Bounding box annotation is used to localize objects such as vehicles, pedestrians, cyclists, traffic signs, and signals. It answers a basic but critical question: what objects are present and where are they located?

For any image annotation company in India working with autonomous datasets, consistency and accuracy at this stage are essential. Poorly aligned boxes or inconsistent class definitions directly impact object detection performance.
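One common way to put a number on box alignment is intersection over union (IoU) between an annotator's box and a reference box for the same object. The sketch below is illustrative only: the `Box` schema, the `needs_review` helper, and the 0.9 threshold are assumptions, not a description of any particular team's tooling.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned 2D box in pixel coordinates (hypothetical schema)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes; 0.0 when they do not overlap."""
    inter_w = max(0.0, min(a.x_max, b.x_max) - max(a.x_min, b.x_min))
    inter_h = max(0.0, min(a.y_max, b.y_max) - max(a.y_min, b.y_min))
    inter = inter_w * inter_h
    area_a = (a.x_max - a.x_min) * (a.y_max - a.y_min)
    area_b = (b.x_max - b.x_min) * (b.y_max - b.y_min)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def needs_review(annotated: Box, reference: Box, min_iou: float = 0.9) -> bool:
    """Flag a box whose overlap with the reference is below the threshold.

    The 0.9 default is an assumed value; a real project would set it per class.
    """
    return iou(annotated, reference) < min_iou
```

A box shifted by half its width against the reference scores an IoU of one third, so `needs_review` would send it back for correction.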

Segmentation: Pixel-Level Understanding of the Environment

While bounding boxes identify objects, segmentation explains the scene at a much deeper level.

Image segmentation enables autonomous systems to understand:

- Drivable vs non-drivable areas

- Road boundaries and lanes

- Sidewalks, curbs, and shoulders

- Objects that occupy or block space

At Pixel Annotation, segmentation is handled as pixel-level classification, where precision matters at the smallest scale.

As part of our image segmentation annotation service, we deliver:

- Semantic segmentation services for scene understanding

- Semantic annotation for structured environment labeling

- High-precision polygon annotation service for complex boundaries

Points We Consider During Segmentation Annotation

- We do not leave a single pixel unannotated

- Every pixel is assigned to the correct class

- Object boundaries are refined with extreme care

- Transitions between regions are handled precisely

- Complex areas such as road–curb edges, vegetation, shadows, and mixed surfaces are annotated in detail
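The first two points in the list above (no unannotated pixels, every pixel in a known class) can be checked automatically before human review. The following is a minimal sketch, assuming a mask stored as a 2D list of integer class IDs; the `UNLABELED` sentinel and the class table are hypothetical. It can confirm that every pixel carries a class from the taxonomy, but not that the class is the right one, which still takes a human reviewer.

```python
from collections import Counter

UNLABELED = -1  # assumed sentinel meaning "no class assigned"
VALID_CLASSES = {0: "road", 1: "curb", 2: "sidewalk", 3: "vegetation"}  # hypothetical taxonomy


def audit_mask(mask: list[list[int]]) -> dict:
    """Count unlabeled and unknown-class pixels in a 2D mask of class IDs."""
    counts = Counter(pixel for row in mask for pixel in row)
    total = sum(counts.values())
    unlabeled = counts.get(UNLABELED, 0)
    unknown = sum(
        n for cls, n in counts.items()
        if cls != UNLABELED and cls not in VALID_CLASSES
    )
    return {
        "total_pixels": total,
        "unlabeled": unlabeled,
        "unknown_class": unknown,
        # A mask passes only if it is non-empty and every pixel has a known class.
        "complete": total > 0 and unlabeled == 0 and unknown == 0,
    }
```

Running `audit_mask` on every delivered mask gives a cheap gate: any result with `complete` set to `False` goes back to the annotator before boundary quality is even considered.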

This approach is critical for AI image segmentation services in India, where autonomous models demand high-fidelity data. Even small segmentation errors can result in incorrect path planning or unsafe navigation decisions.

Our Image Segmentation Services in India are designed to meet the precision requirements of safety-critical autonomous applications.

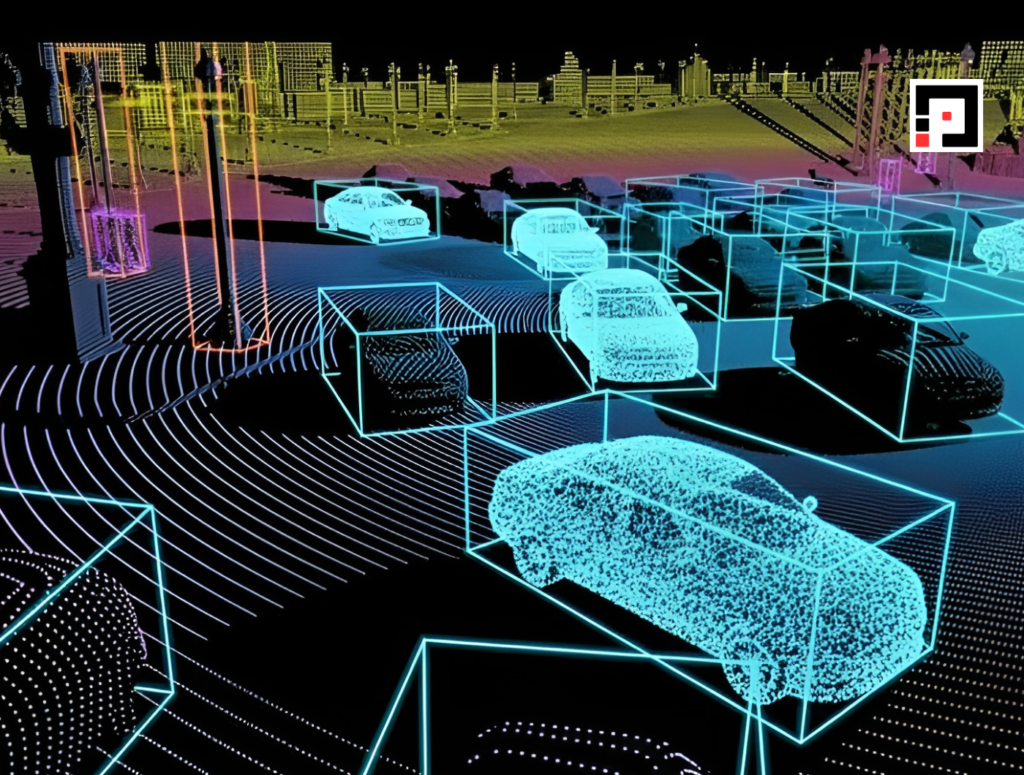

3D Annotation: Adding Depth and Spatial Awareness

Camera images alone cannot provide accurate distance or scale information. Autonomous systems rely on 3D annotation using LiDAR data to understand spatial relationships.

3D cuboid annotation captures:

- Object dimensions

- Orientation in space

- Distance from the vehicle

This spatial awareness is essential for collision avoidance, lane merging, and speed control, making it a core part of advanced AI data annotation services for autonomous mobility.
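To make the three properties concrete, here is one way a single cuboid label might be represented. This is a sketch under stated assumptions: the `Cuboid3D` schema, the ego-vehicle coordinate convention (x forward, y left, z up, in meters), and the method names are all hypothetical, and real LiDAR datasets each define their own format.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Cuboid3D:
    """One 3D box label in the ego-vehicle frame (hypothetical schema)."""
    label: str
    center: tuple[float, float, float]  # x forward, y left, z up, in meters (assumed)
    size: tuple[float, float, float]    # length, width, height, in meters
    yaw: float                          # heading around the z axis, in radians

    def ground_distance(self) -> float:
        """Horizontal distance from the ego origin to the box center."""
        x, y, _ = self.center
        return math.hypot(x, y)

    def footprint_corners(self) -> list[tuple[float, float]]:
        """Four ground-plane corners after rotating the box by its yaw."""
        cx, cy, _ = self.center
        half_l, half_w = self.size[0] / 2, self.size[1] / 2
        cos_y, sin_y = math.cos(self.yaw), math.sin(self.yaw)
        corners = []
        for dx, dy in ((half_l, half_w), (half_l, -half_w),
                       (-half_l, -half_w), (-half_l, half_w)):
            # Standard 2D rotation of the corner offset, then shift to the center.
            corners.append((cx + dx * cos_y - dy * sin_y,
                            cy + dx * sin_y + dy * cos_y))
        return corners
```

For example, `Cuboid3D("car", (3.0, 4.0, 0.5), (4.0, 2.0, 1.5), 0.0).ground_distance()` returns `5.0`. Dimensions, orientation, and distance each map to one field or method, which is why an error in any single value (a yaw off by 90 degrees, say) changes the footprint a planner sees.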

Why Manual Annotation Is Still Critical for Autonomous Vehicles

Automation and model-assisted labeling can improve speed, but they cannot replace human precision, especially in autonomous driving where the margin for error is extremely small.

No model is 100% accurate. Automated systems may miss partially visible pedestrians, mislabel complex boundaries, or fail in rare edge cases. This is why manual annotation remains essential.

At Pixel Annotation, all datasets are annotated by trained human annotators and reviewed through a dedicated quality assurance process, where each and every annotation is carefully validated.

Consider a real-world scenario:

If an automated labeling model skips a pedestrian because of occlusion or poor lighting, a perception model trained on that data may fail to detect similar pedestrians in real traffic. In autonomous systems, this is not a minor error; it can result in incorrect perception, delayed response, or unsafe outcomes.

This is where experienced human annotators and QA workflows demonstrate their value. Manual annotation and review help ensure critical objects are not overlooked, even in complex or ambiguous scenes.
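One simple way to see what automation misses is to compare a model's pre-labels with the labels that remain after human review. The sketch below assumes each frame is reduced to a list of class names; the function names and the input layout are hypothetical. It compares counts only and does not match individual objects by position, so it is a rough indicator rather than a full audit.

```python
from collections import Counter


def added_in_review(pre_labels, reviewed_labels):
    """Per-class count of objects reviewers added that the pre-labels lacked.

    Both arguments are lists of class names for a single frame. Counter
    subtraction keeps only positive counts, so classes where the model
    produced as many or more labels than the reviewers do not appear.
    """
    return Counter(reviewed_labels) - Counter(pre_labels)


def miss_report(frames):
    """Sum reviewer additions over many frames.

    `frames` maps a frame ID to a (pre_labels, reviewed_labels) pair.
    """
    total = Counter()
    for pre_labels, reviewed_labels in frames.values():
        total += added_in_review(pre_labels, reviewed_labels)
    return total
```

If a frame's pre-labels are `["car", "pedestrian"]` and the reviewed labels are `["car", "pedestrian", "pedestrian"]`, the report shows one pedestrian that only the human pass caught. Tracked over a whole dataset, this shows which classes automated labeling tends to miss.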

Why Annotation Quality Directly Impacts Safety

Every perception module — object detection, segmentation, tracking, and prediction — depends on accurate ground truth data.

Poor annotation leads to:

- Missed detections

- False positives

- Incorrect distance estimation

- Unreliable predictions

High-quality annotation creates models that generalize better and behave more predictably in real-world environments.
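Missed detections and false positives are usually summarized as recall and precision. A minimal sketch of that arithmetic follows; the function name and argument names are illustrative, and the counts would come from comparing model output against ground truth on a validation set.

```python
def precision_recall(true_positives: int, false_positives: int, missed: int):
    """Return (precision, recall) from detection counts.

    precision = TP / (TP + FP): how many reported objects were real.
    recall    = TP / (TP + missed): how many real objects were found.
    Either value is 0.0 when its denominator is zero.
    """
    reported = true_positives + false_positives
    actual = true_positives + missed
    precision = true_positives / reported if reported else 0.0
    recall = true_positives / actual if actual else 0.0
    return precision, recall
```

With 90 correct detections, 10 false positives, and 30 missed objects, precision is 0.9 and recall is 0.75. Because the ground truth itself comes from annotation, labeling errors distort these numbers as well as the model they measure.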

Pricing Model for Autonomous Vehicle Annotation

Our pricing for autonomous vehicle annotation is calculated per annotation rather than as a one-size-fits-all rate.

The cost depends on:

- Annotation type (bounding boxes, segmentation, 3D, video tracking)

- Scene complexity

- Level of precision and quality required

- Dataset volume and edge-case density

This flexible approach ensures clients pay based on the actual annotation effort and quality level needed, rather than a generic flat rate.

Conclusion

Autonomous driving is not enabled by algorithms alone. It is enabled by accurately labeled, carefully validated data.

As an experienced image annotation company in India offering end-to-end AI data annotation services, Pixel Annotation focuses on precision, scalability, and quality, especially for safety-critical use cases like autonomous vehicles.

From AI image segmentation services in India to large-scale autonomous datasets, we approach annotation as a responsibility, not just a service.